A guide on how you can automate adding the flow metric of Work Item Age directly into your issues in Jira using Power Automate…

Context

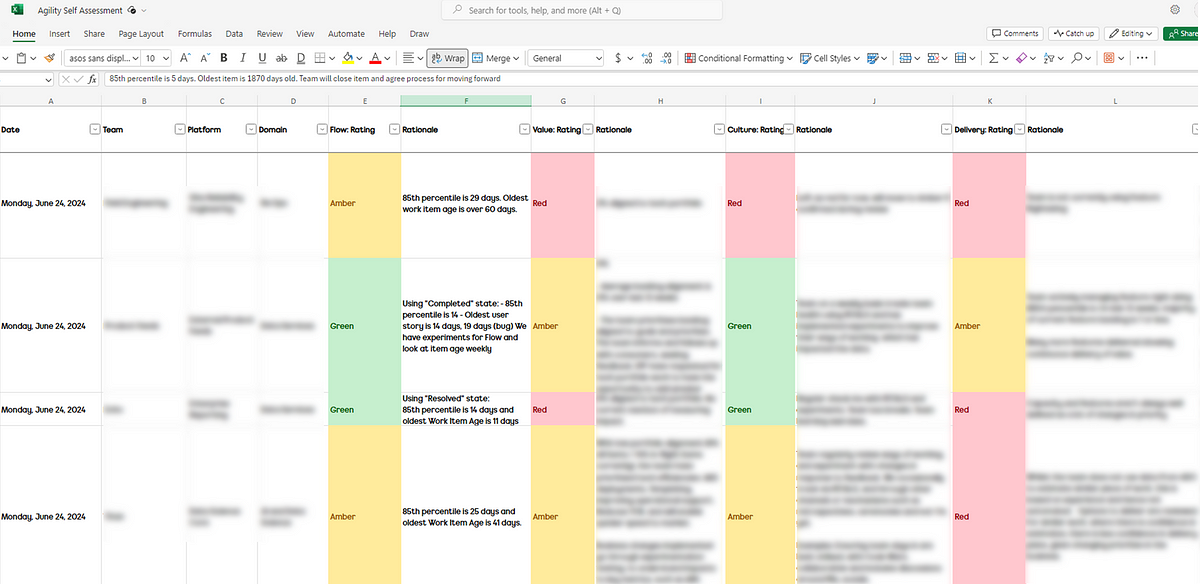

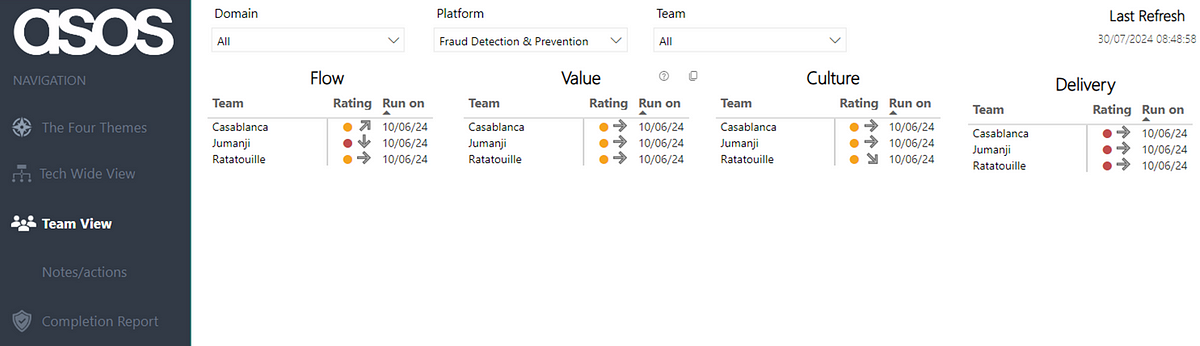

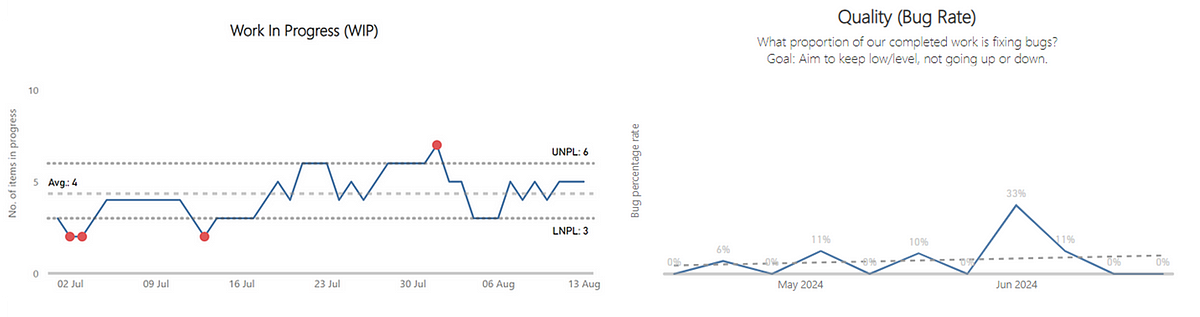

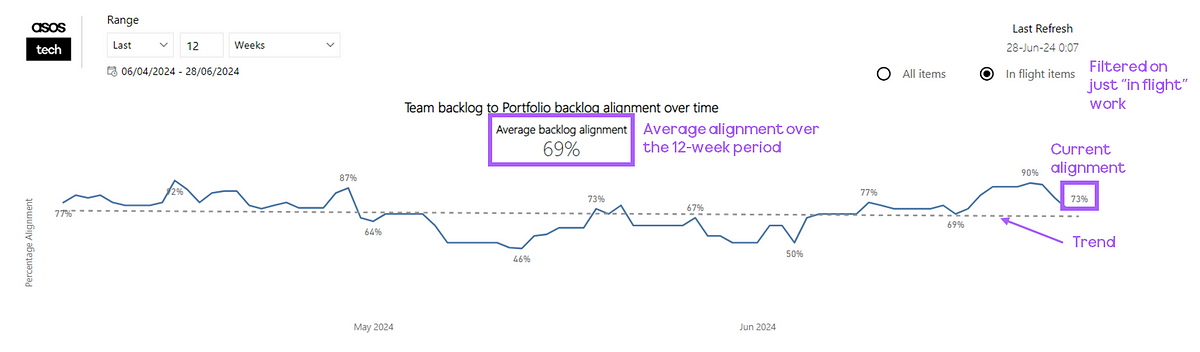

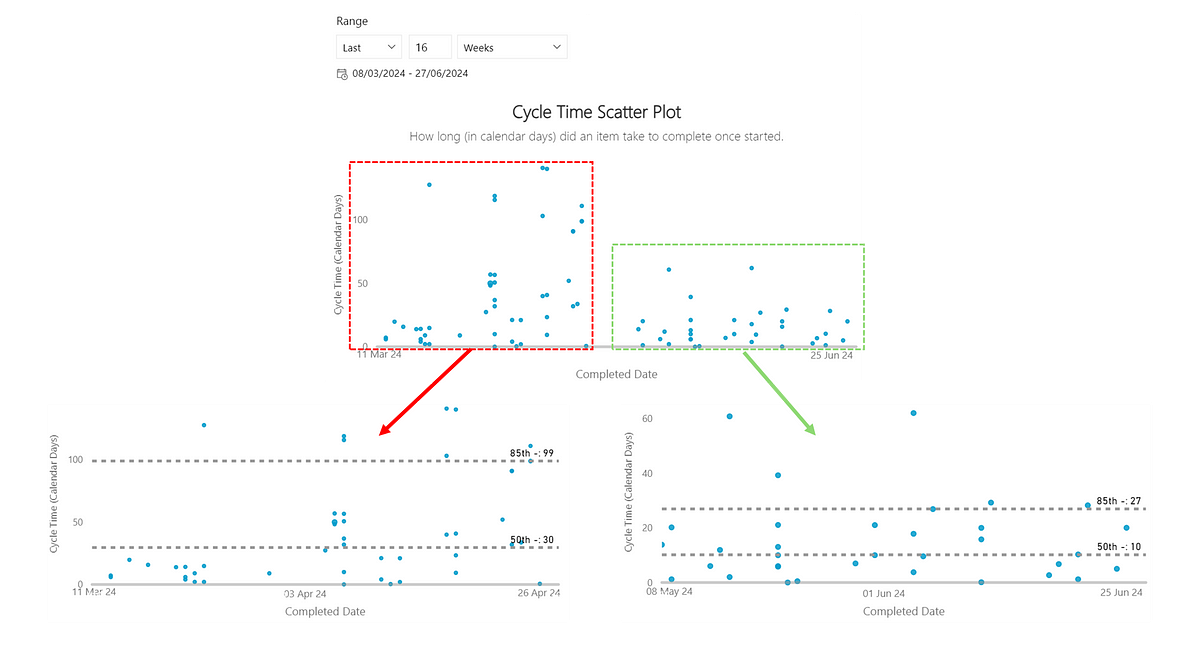

As teams grow more curious about their flow of work, making this information as readily available to them as possible is paramount. Flow metrics are the clear go-to, as they provide great insight into predictability, responsiveness and just how sustainable a pace a team is working at. There is, however, a challenge in getting teams to use them frequently. Whilst using them in a retrospective (say, looking at outliers on a cycle time scatter plot) is common practice, it is a lot harder to embed them into everyday conversations.

There is no doubt these charts add great value, but plenty of teams forget about them in their daily sync/scrum, as they will (more often than not) be focused on sharing their Kanban board. When planning their day, they discuss the items on the board rather than a flow metrics chart or dashboard. As an Agile Coach, no matter how often I show it and stress its importance, plenty of teams I work with still forget about the “secret sauce” of Work Item Age in their daily sync/scrum because it sits on a different URL/tool.

Example Work Item Age chart

This got me thinking about how we might overcome this and remove a ‘barrier to entry’ around flow. Thankfully, automation tools can help. We can use tools like Power Automate, combined with Jira’s REST API, to improve the way teams work by making flow data visible…

Prerequisites

There are a few assumptions made in this series of posts:

Within Jira, you have permission (or know someone who has permission) to add custom fields to a project

You know how to create (and have created) an API token in Jira

Statuses/status categories in your Jira project are configured correctly

You have access to Power Automate and can create premium flows

With all those in place — let’s get started!

Adding a Work Item Age field in Jira

The first thing we need to do is add a custom field for Work Item Age. To do this, navigate to the Jira project you want to add it to. Click ‘Project settings’, then choose the relevant issue type (in this case we’re just choosing Story to keep it simple). Choose ‘Number’ as the field type, name the field Work Item Age (Days) and add a description of what it is:

Once done, click ‘Save Changes’. If you want the field on other issue types too, add it to each of them in the same way.

Finding out the name of this field

This is one of the trickier parts of this setup. In the Jira REST API, custom fields give no indication of what they refer to in their naming; they simply go by an ID such as ‘customfield_12345’. So we have to work out which ID belongs to our Work Item Age field.

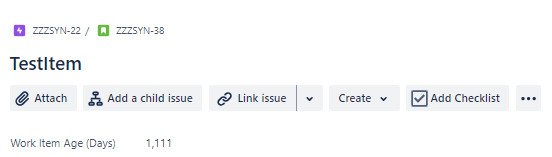

To do so (edit: after posting, Caidyrn Roder pointed me to this article), populate a dummy item with a unique Work Item Age, as I have done here with the value 1111:

Next, use the API to query that specific item, then use CTRL + F to search the response for that value. The query will look similar to the one below; just replace the parts I’ve indicated:

https://[ReplaceWithYourJiraInstanceName].atlassian.net/rest/api/2/search?jql=key%20%3D%20[ReplaceWithTheKeyOfTheItem]

My example is:

https://nickbtest.atlassian.net/rest/api/2/search?jql=key%20%3D%20ZZZSYN-38

We can then do a quick CTRL + F for this value:

We can see that our Work Item Age field is called customfield_12259. This will be different in your Jira instance! Once you have it, note it down as we’re going to need it later…
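If you’d rather script this lookup than search by eye, here’s a rough Python sketch of the same idea. It builds the single-issue search URL shown above, then scans an already-decoded search response for any field holding the sentinel value. The function names and the sample response are hypothetical, for illustration only. Jira typically returns number fields as decimals such as 1111.0, which still compares equal to 1111 in Python.

```python
from urllib.parse import quote

# Hypothetical, trimmed-down search response for the dummy item (illustration only)
SAMPLE_RESPONSE = {
    "issues": [
        {
            "key": "ZZZSYN-38",
            "fields": {"summary": "Dummy item", "customfield_12259": 1111.0},
        }
    ]
}


def issue_search_url(instance: str, issue_key: str) -> str:
    """Build the search URL for a single issue (JQL: key = <issue_key>)."""
    # safe="" makes quote() encode spaces and "=" as %20 and %3D
    jql = quote(f"key = {issue_key}", safe="")
    return f"https://{instance}.atlassian.net/rest/api/2/search?jql={jql}"


def find_fields_with_value(search_response: dict, sentinel) -> list:
    """Return the IDs of fields whose value equals the sentinel on any returned issue."""
    matches = []
    for issue in search_response.get("issues", []):
        for field_id, value in issue.get("fields", {}).items():
            if value == sentinel:
                matches.append(field_id)
    return matches
```

Calling issue_search_url("nickbtest", "ZZZSYN-38") reproduces the example URL above, and find_fields_with_value(SAMPLE_RESPONSE, 1111) returns ['customfield_12259'].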

Understanding how Work Item Age is to be calculated

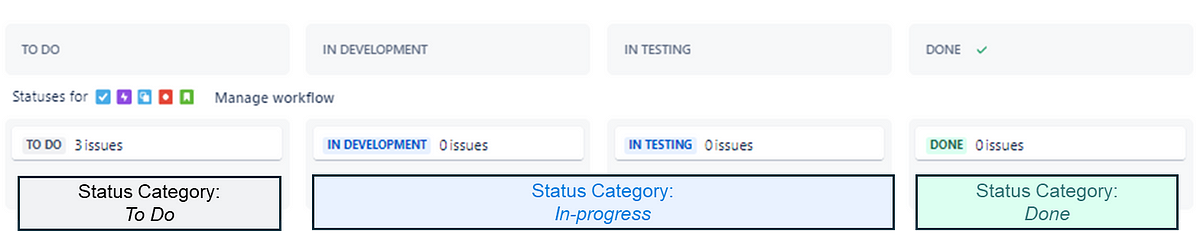

In Jira, statuses belong to a particular workflow. They are the statuses items move through, and they also map to columns on your board. Status categories are something not many folks are aware of. These are essentially ‘containers’ that statuses sit in. Thankfully, there are only three: To Do, In Progress and Done. These are visible when adding statuses in your workflow:

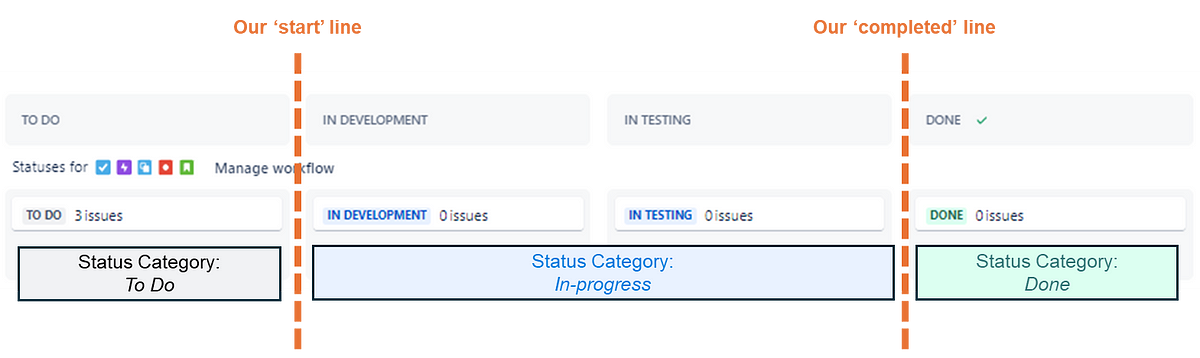

An easier-to-understand visual representation of this on a Kanban board would be:

What’s also helpful is that Jira doesn’t just record a timestamp when an item changes Status; it also does so when it changes Status Category. We can use this to work out Work Item Age relatively easily. Work Item Age is ‘the amount of elapsed time between when a work item started and the current time’. If we treat ‘started’ as the moment an item moved into ‘In Progress’, we can use its StatusCategoryChangedDate as the start time, which is the key thing we need to calculate Work Item Age.
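To make the calculation concrete, here’s a minimal Python sketch. Later in the flow, Power Automate does this with ticks(), so the sketch mirrors that approach: it counts 100-nanosecond ticks since 0001-01-01 for both timestamps and divides the difference by the number of ticks in a day. It assumes the timestamp comes back in the form Jira typically uses (for example 2023-05-01T09:15:30.123+0100); the function names are my own, for illustration only.

```python
from datetime import datetime, timezone

# One tick is 100 ns, so a day is 24 * 60 * 60 * 10,000,000 ticks
TICKS_PER_DAY = 864_000_000_000
# Ticks are counted from midnight UTC on 0001-01-01
TICK_EPOCH = datetime(1, 1, 1, tzinfo=timezone.utc)


def ticks(moment: datetime) -> int:
    """Emulate ticks(): whole 100-nanosecond intervals since 0001-01-01T00:00:00Z."""
    delta = moment - TICK_EPOCH
    whole_seconds = delta.days * 86_400 + delta.seconds
    return whole_seconds * 10_000_000 + delta.microseconds * 10


def work_item_age_days(status_category_changed: str, now: datetime) -> int:
    """Whole days elapsed since the item entered its current status category."""
    # Parse a Jira-style timestamp such as 2023-05-01T09:15:30.123+0100
    started = datetime.strptime(status_category_changed, "%Y-%m-%dT%H:%M:%S.%f%z")
    return (ticks(now) - ticks(started)) // TICKS_PER_DAY
```

For example, work_item_age_days("2023-05-01T09:15:30.123+0100", datetime(2023, 5, 11, 9, 0, tzinfo=timezone.utc)) gives 10: the item entered In Progress at 08:15:30 UTC on 1 May, so just over ten whole days have elapsed. Python’s // floors rather than truncates, but for a start date in the past the difference is never negative, so both give the same whole number of days.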

Automating Work Item Age

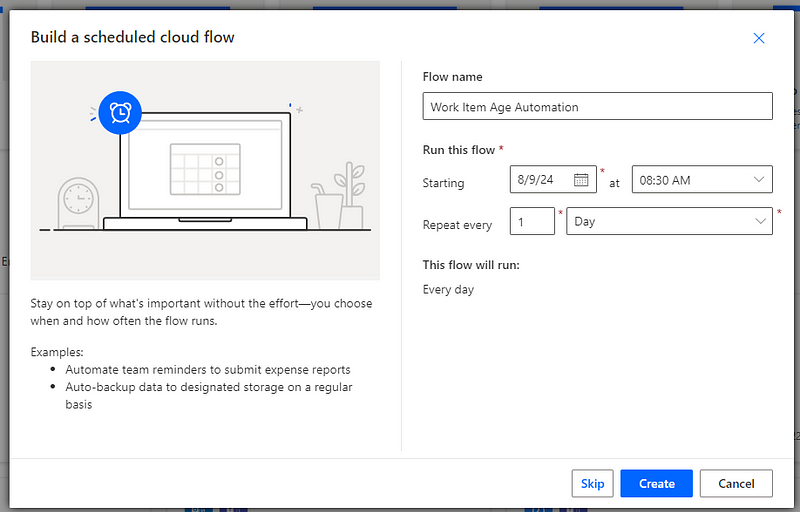

First, we need to set up the schedule for our flow. To do this, navigate to Power Automate and create a ‘Scheduled cloud flow’:

The timing is entirely up to you, but my tip would be to schedule it before the daily stand-up/sync. Once you’re happy with it, give it a name and click ‘Create’:

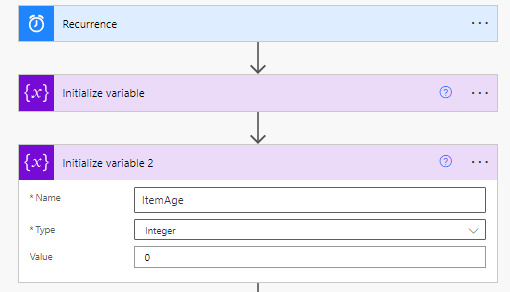

Following this, we add an Initialize variable step. This is essentially where we will ‘store’ our issue keys in the format Power Automate needs (an array), with an initial value of ‘[]’:

We then add a second Initialize variable step, this time to ‘store’ our Work Item Age, which starts as an integer with a value of 0:

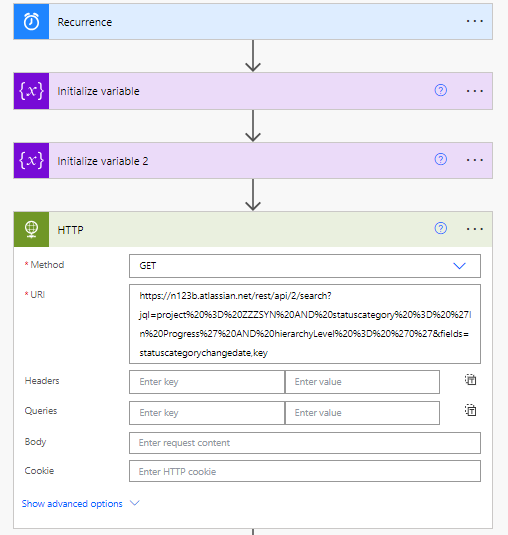

After this, we add an HTTP step. This is where we GET all our ‘In Progress’ issues along with the date they moved into the ‘In Progress’ status category, referred to in the API as the StatusCategoryChangedDate. You’ll also notice I am filtering on the story hierarchy level (hierarchyLevel = 0 in JQL); this is just for simplicity and can be removed if you want to do this at multiple backlog hierarchy levels:

https://[ReplaceWithYourJiraInstanceName].atlassian.net/rest/api/2/search?jql=project%20%3D%20[ReplaceWithYourJiraProjectName]%20AND%20statuscategory%20%3D%20%27In%20Progress%27%20AND%20hierarchyLevel%20%3D%20%270%27&fields=statuscategorychangedate,key

My example:

https://n123b.atlassian.net/rest/api/2/search?jql=project%20%3D%20ZZZSYN%20AND%20statuscategory%20%3D%20%27In%20Progress%27%20AND%20hierarchyLevel%20%3D%20%270%27&fields=statuscategorychangedate,key
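If you’d rather not hand-encode the JQL, a small Python sketch like the one below produces the same URL. The function name and the hierarchy_level parameter are hypothetical; urllib.parse.quote with safe='' percent-encodes the spaces, the = signs and the single quotes exactly as in the URLs above.

```python
from urllib.parse import quote


def in_progress_search_url(instance: str, project: str, hierarchy_level: str = "0") -> str:
    """Build the search URL for a project's 'In Progress' items at one hierarchy level."""
    jql = (
        f"project = {project} AND statuscategory = 'In Progress' "
        f"AND hierarchyLevel = '{hierarchy_level}'"
    )
    # Only the JQL is percent-encoded; the fields list is appended as-is
    return (
        f"https://{instance}.atlassian.net/rest/api/2/search"
        f"?jql={quote(jql, safe='')}&fields=statuscategorychangedate,key"
    )
```

in_progress_search_url("n123b", "ZZZSYN") returns the example URL above. One thing to keep in mind is that the search endpoint returns results in pages (hence startAt, maxResults and total in the response), with 50 results per page by default, so a team with a very large amount of work in progress would need to page through them.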

You will then need to click ‘Show advanced options’ to add your API token details. Set the authentication to ‘Basic’, enter the email address associated with your Jira account as the username, and paste your API token into the password field:

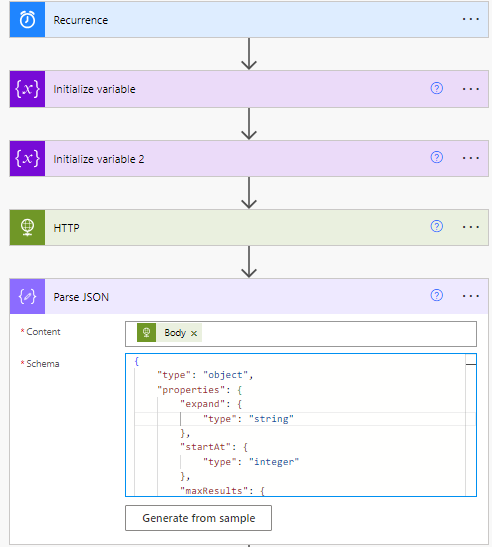
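For reference, ‘Basic’ authentication means the request carries an Authorization header containing your email and API token, joined by a colon and Base64-encoded. Here’s a minimal Python sketch of how that header is built; the function name is hypothetical, and the credentials in the example are placeholders.

```python
import base64


def basic_auth_header(email: str, api_token: str) -> dict:
    """Build an HTTP Basic Authorization header from an email and API token."""
    credentials = f"{email}:{api_token}".encode("utf-8")
    encoded = base64.b64encode(credentials).decode("ascii")
    return {"Authorization": f"Basic {encoded}"}
```

With placeholder credentials, basic_auth_header("user", "pass") returns {"Authorization": "Basic dXNlcjpwYXNz"}.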

Then we add a PARSE JSON step to parse the response. Choose ‘body’ as the content and add a schema like so:

{
  "type": "object",
  "properties": {
    "expand": {
      "type": "string"
    },
    "startAt": {
      "type": "integer"
    },
    "maxResults": {
      "type": "integer"
    },
    "total": {
      "type": "integer"
    },
    "issues": {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "expand": {
            "type": "string"
          },
          "id": {
            "type": "string"
          },
          "self": {
            "type": "string"
          },
          "key": {
            "type": "string"
          },
          "fields": {
            "type": "object",
            "properties": {
              "statuscategorychangedate": {
                "type": "string"
              }
            }
          }
        },
        "required": [
          "expand",
          "id",
          "self",
          "key",
          "fields"
        ]
      }
    }
  }
}

Then we need to add an Apply to each step and select the ‘issues’ value from our previous PARSE JSON step:

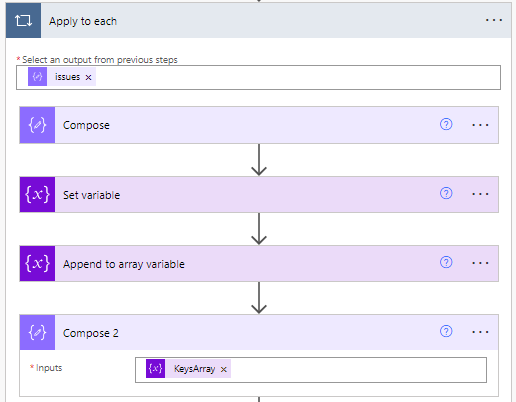
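Taken together, the PARSE JSON and Apply to each steps amount to reading the issues array and pulling out each key with its statuscategorychangedate. Here’s a rough Python sketch of that, assuming the response body is the JSON returned by the HTTP step; the function name and sample data are hypothetical.

```python
import json


def in_progress_items(response_body: str) -> list:
    """Return (key, statuscategorychangedate) pairs from a Jira search response body."""
    data = json.loads(response_body)
    pairs = []
    # Equivalent of looping over 'issues' in Apply to each
    for issue in data.get("issues", []):
        # The schema marks 'key' and 'fields' as required on every issue
        key = issue["key"]
        changed = issue["fields"]["statuscategorychangedate"]
        pairs.append((key, changed))
    return pairs
```

The keys alone ([key for key, _ in pairs]) correspond to what ends up in KeysArray later in the flow.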

Then we add a Compose action. This is where we calculate the Work Item Age from today’s date and the StatusCategoryChangedDate, using an expression:

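div(sub(ticks(utcNow()), ticks(items('Apply_to_each')?['fields']?['statuscategorychangedate'])), 864000000000)

Ticks are 100-nanosecond intervals, so dividing the difference by 864000000000 (the number of ticks in a day) converts it into whole days.

Next, we add a Set variable action that sets our ItemAge variable to the outputs of the previous Compose step: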

Then add an Append to array variable action where we’re going to append ‘key’ to our ‘KeysArray’:

Then we’ll add another Compose action where we’ll include our new KeysArray:

Then we’re going to add another Apply to each step with the outputs of our second compose as the starting point:

Then we choose the Jira Edit Issue (V2) action. Populate it with your Jira instance (choose ‘Custom’, then copy/paste it in), use ‘key’ from the Apply to each step in the Issue ID or Key field, and finally add the following in ‘fields’:

Where ItemAge is your variable from the previous steps.
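If it helps to see what is being sent to Jira, here’s a minimal Python sketch of a roughly equivalent call to Jira’s REST API: a PUT to /rest/api/2/issue/{issueIdOrKey} with a fields object. It assumes the field ID customfield_12259 from earlier (yours will differ) and a dictionary mapping each issue key to the age computed for it, so every issue receives its own value. The function names are hypothetical, and the sketch only builds the requests rather than sending them.

```python
import json
from typing import Optional


def edit_issue_request(instance: str, issue_key: str, field_id: str, age_days: Optional[int]):
    """Return (method, url, body) for updating one issue's Work Item Age field."""
    url = f"https://{instance}.atlassian.net/rest/api/2/issue/{issue_key}"
    # None serialises to JSON null, which clears the field
    body = json.dumps({"fields": {field_id: age_days}})
    return "PUT", url, body


def updates_for(ages: dict, instance: str, field_id: str) -> list:
    """Build one request per issue, pairing each key with its own age."""
    return [edit_issue_request(instance, key, field_id, age) for key, age in ages.items()]
```

Passing None as the age sends JSON null, which clears the field: the same end result the Automation rule at the end of this post achieves for completed items.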

Once that is done, your flow is ready. Click ‘Save’ and give it a test; the flow run history will show whether it completed successfully.

Then you should have the Work Item Age visible on the issue page:

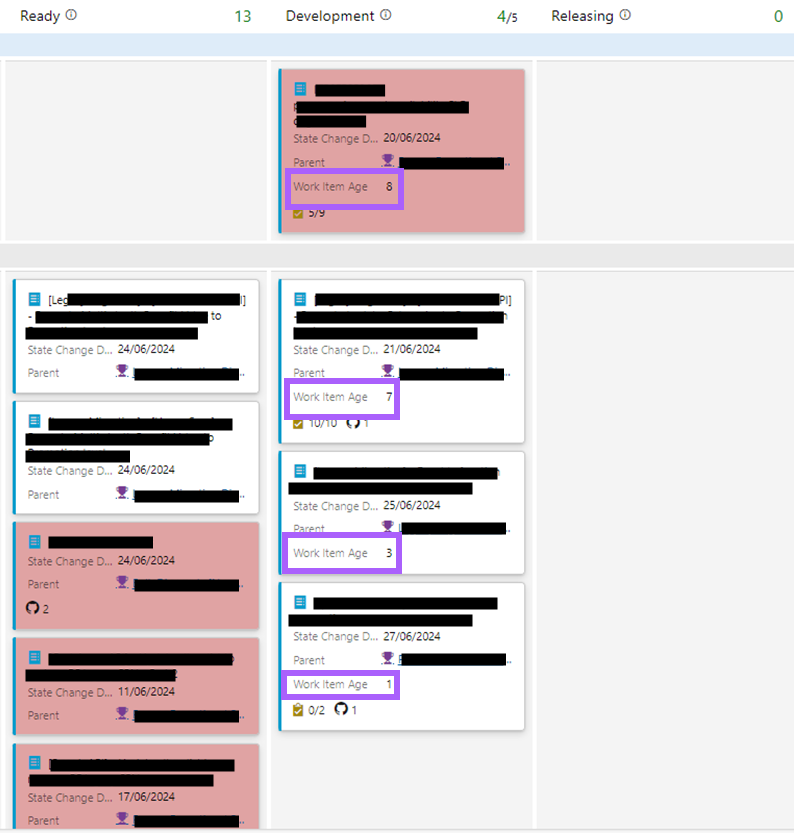

Depending on your Jira setup, you could then configure the kanban board to also display this:

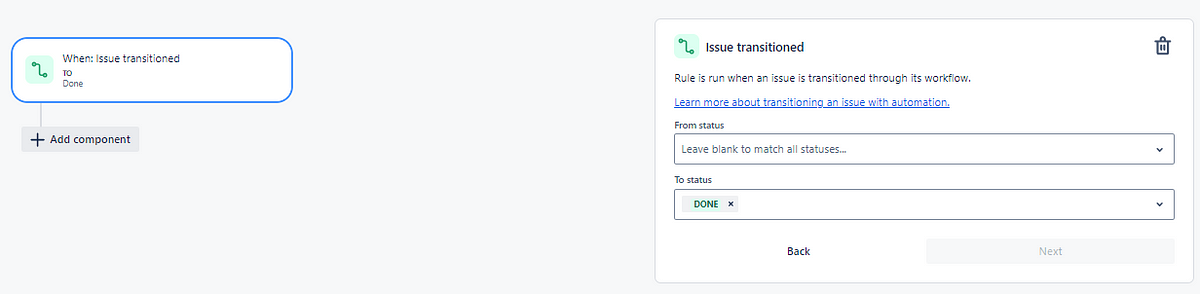

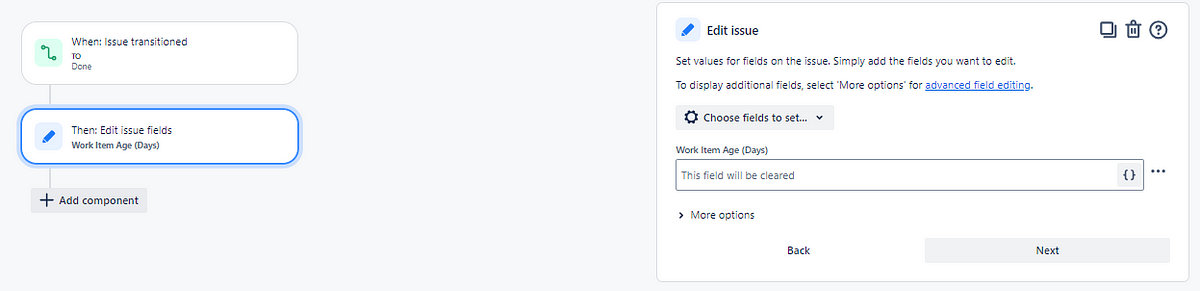

Finally, we want to make sure the Work Item Age field is cleared whenever an item moves to ‘Done’ (or whatever your done statuses are). To do this, go to Project Settings > Automation and set up a rule like so:

That way Work Item Age will no longer be populated for completed items, as they are no longer ‘in progress’.

Hopefully this is a useful starting point for increasing awareness of Work Item Age on issues/the Jira board for your team :)